Gesture glove to vocalize sign language


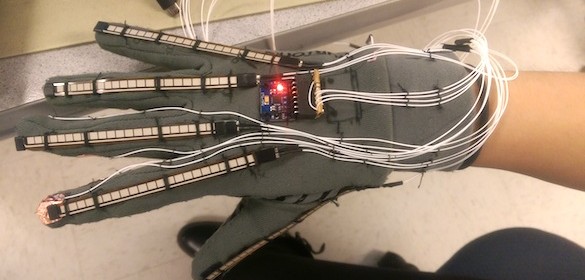

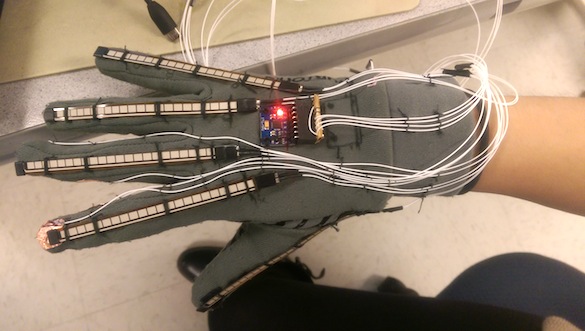

The Fall 2014 semester is now over, and new embedded projects from Cornell students have been published on Bruce Land’s ECE4760 class website. Monica Lin and Roberto Villalba have made a gesture glove for people with hearing disabilities. The device is intended to help them communicate with other people by converting their sign language gestures into speech. For their project, they used five flex sensors to sense and quantify the bending of each finger, and an MPU-6050 sensor (a 3-axis gyroscope and a 3-axis accelerometer) to detect the orientation and rotational movement of the hand.

They write:

We designed and built a glove to be worn on the right hand that uses a Machine Learning (ML) algorithm to translate sign language into spoken English. Every person’s hand is a unique size and shape, and we aimed to create a device that could provide reliable translations regardless of those differences. Our device uses five Spectra Symbol Flex-Sensors that we use to quantify how much each finger is bent, and the MPU-6050 (a three-axis accelerometer and gyroscope) is able to detect the orientation and rotational movement of the hand. These sensors are read, averaged, and arranged into packets using an ATmega1284p microcontroller. These packets are then sent serially to a user’s PC to be run in conjunction with a Python script. The user creates data sets of information from the glove for each gesture that should eventually be translated, and the algorithm trains over these datasets to predict later at runtime what a user is signing.
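
To give a rough idea of what the PC side of such a pipeline could look like, here is a minimal Python sketch. It is not Lin and Villalba's code: the packet layout (eleven comma-separated numbers, five flex readings followed by three accelerometer and three gyroscope axes), the function names, and the nearest-centroid classifier are all assumptions made for illustration, and the quoted description does not say which ML algorithm they used.

```python
from statistics import fmean

# Assumed packet layout (hypothetical): 5 flex + 3 accelerometer + 3 gyroscope values.
N_FEATURES = 11


def parse_packet(line):
    """Parse one comma-separated text packet into a list of floats."""
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != N_FEATURES:
        raise ValueError(f"expected {N_FEATURES} values, got {len(values)}")
    return values


def train(datasets):
    """Average the recorded samples of each gesture into one centroid.

    datasets maps a gesture label to a list of feature vectors.
    """
    return {
        label: [fmean(column) for column in zip(*samples)]
        for label, samples in datasets.items()
    }


def predict(centroids, sample):
    """Return the label whose centroid is closest to the sample."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], sample))
```

In use, each line received from the serial port would go through `parse_packet`, the recorded vectors for each gesture would be passed to `train`, and `predict` would then be called on each new packet at runtime. A nearest-centroid rule is only the simplest stand-in for the training step the students describe.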

And don’t forget to watch their demo video, posted below.

